Have you checked your website's page indexing lately? If not, it's time to do so! Page indexing is crucial for search engine optimisation (SEO) and plays a vital role in determining your website's ranking.

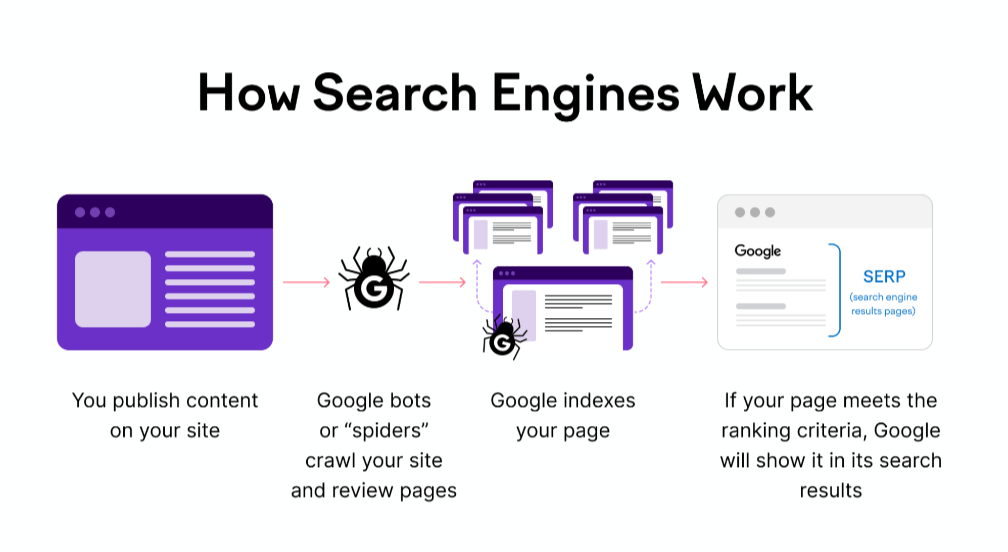

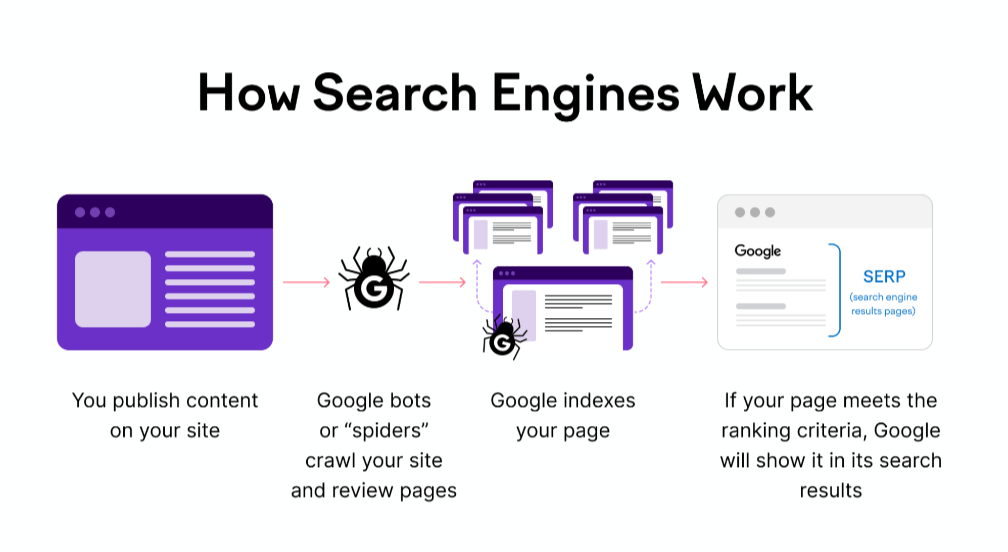

In simple terms, page indexing is the process by which search engines analyse your web pages and add them to their databases. If your pages are not indexed, they won't appear on search engine results pages (SERPs), which reduces your website's traffic.

But the question is, why aren't your website pages indexed? In this blog post, we'll discuss the 7 most common reasons why your pages aren’t indexed and how to fix them.

What is Page Indexing?

Page indexing is the process by which search engines like Google store and organize content from web pages for fast and accurate retrieval.

When a page is indexed, it becomes eligible to appear in search engine results, making it discoverable by the audience.

The absence of indexing means your content remains invisible to search engine users, no matter how valuable or relevant it might be.

The most effective tool to monitor your website's indexing status is Google Search Console.

This free tool provided by Google not only helps in tracking your site's performance in Google search but also provides critical insights into your site's indexing status. Two key features to leverage for monitoring page indexing:

- Page Indexing Report

- URL Inspection Tool

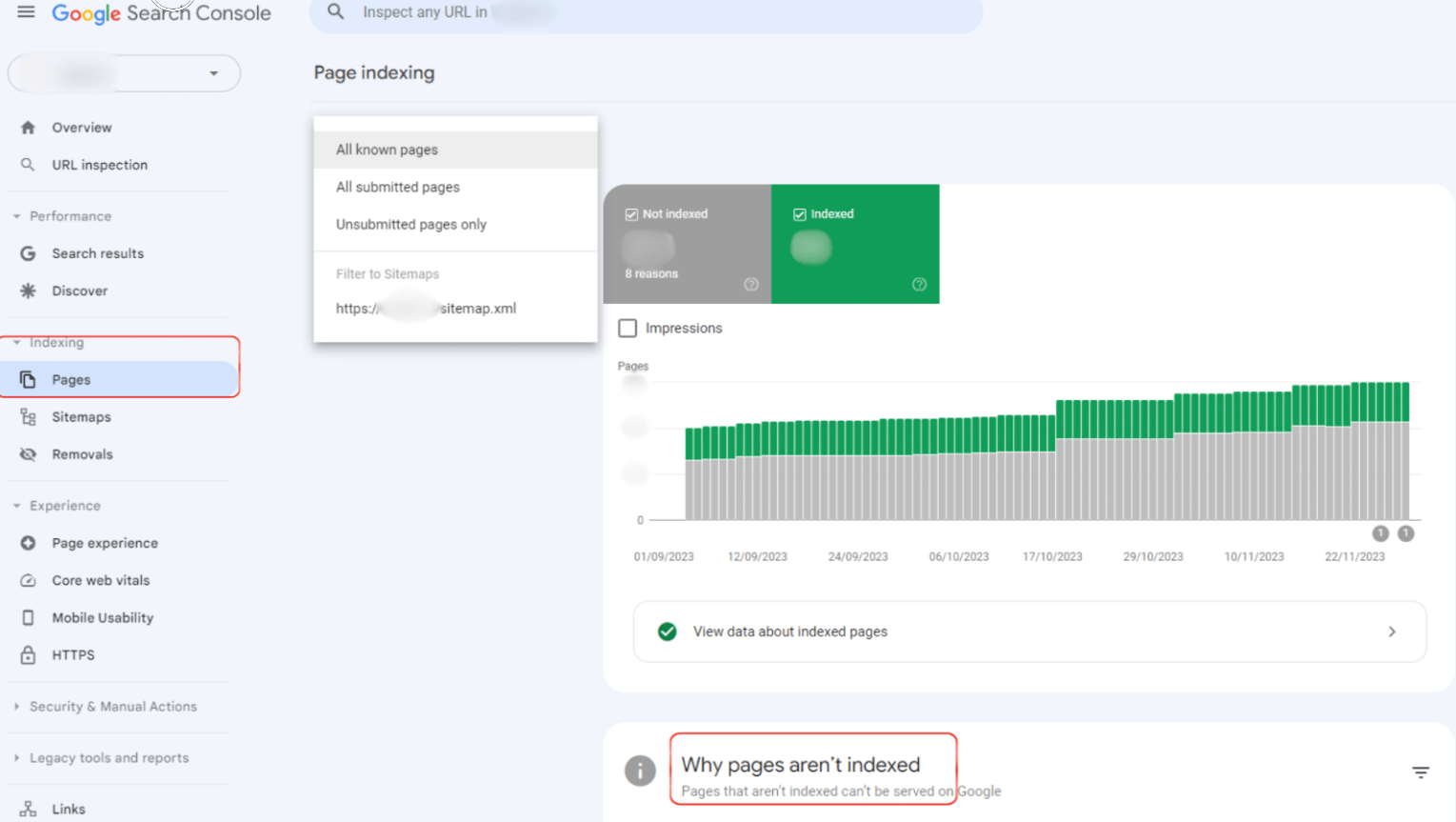

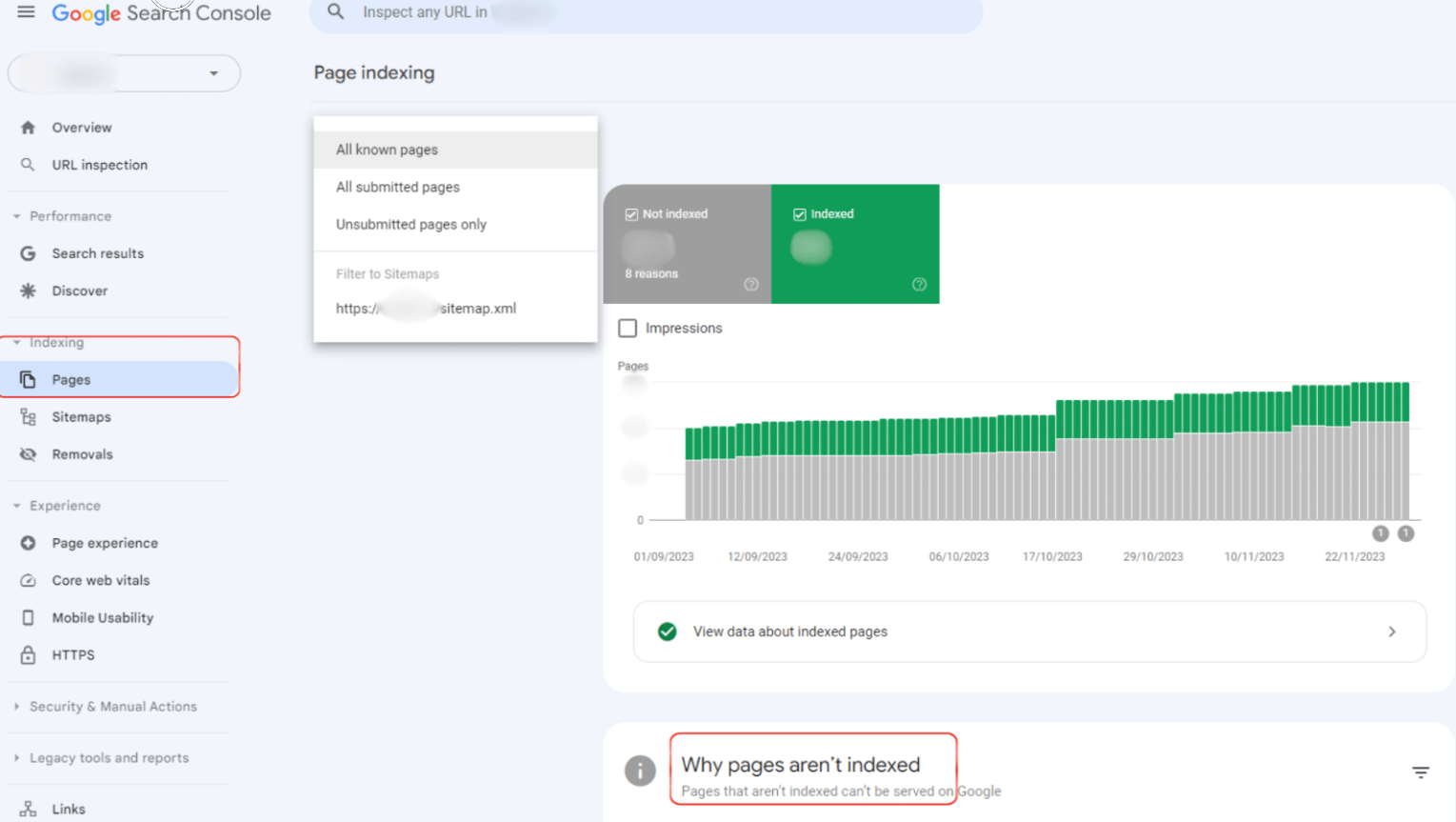

Page Indexing Report in Google Search Console

In Google Search Console, find the “Indexing” option in the sidebar and select the “Pages” tab. This leads you to the main interface for the Page Indexing Report.

The summary page presents a graph and a current count of indexed and non-indexed pages. What you want to see here is a steady increase in indexed pages, especially if you frequently publish new content. Sudden drops or spikes in this graph can be red flags, suggesting issues that need deeper investigation.

To identify and fix Search Console indexing errors, concentrate on the “Why pages aren’t indexed” table. This will be your primary resource for pinpointing and addressing the specific issues that prevent your pages from being indexed.

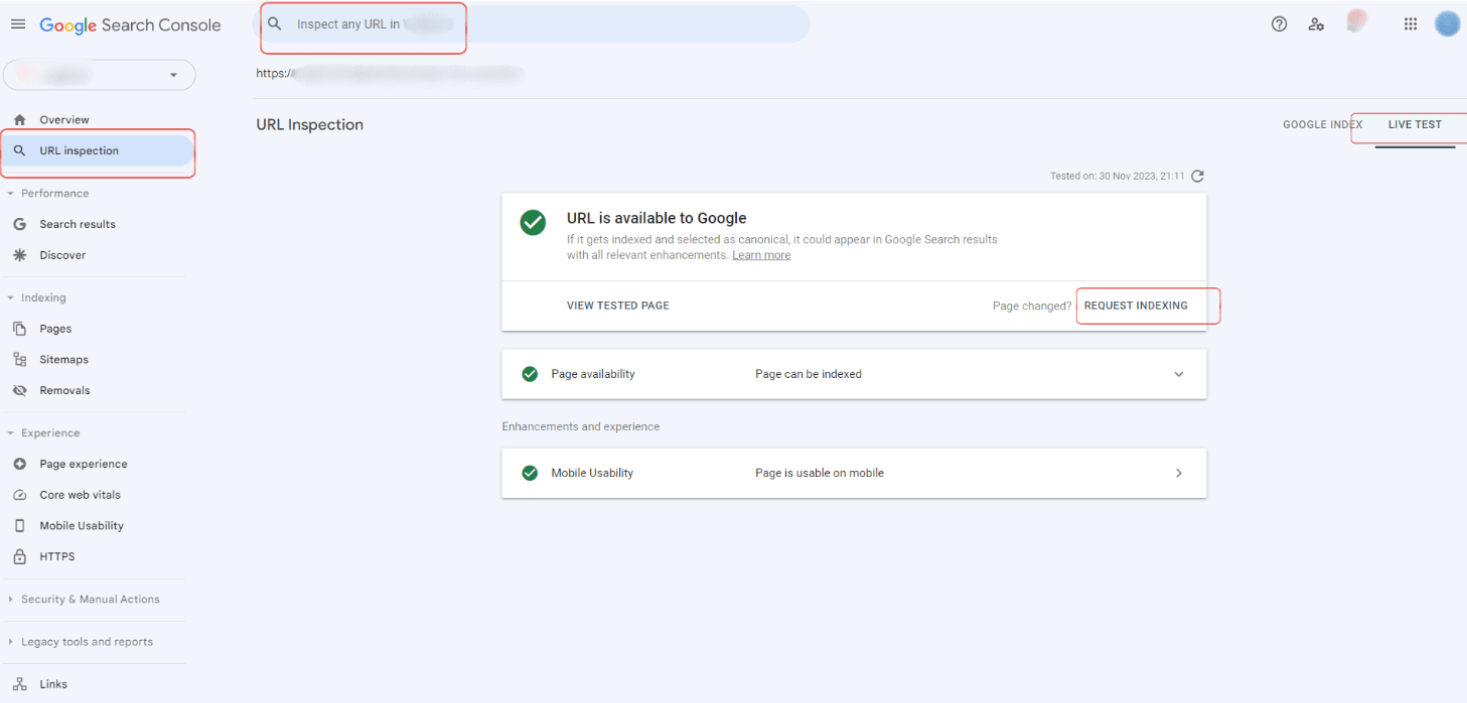

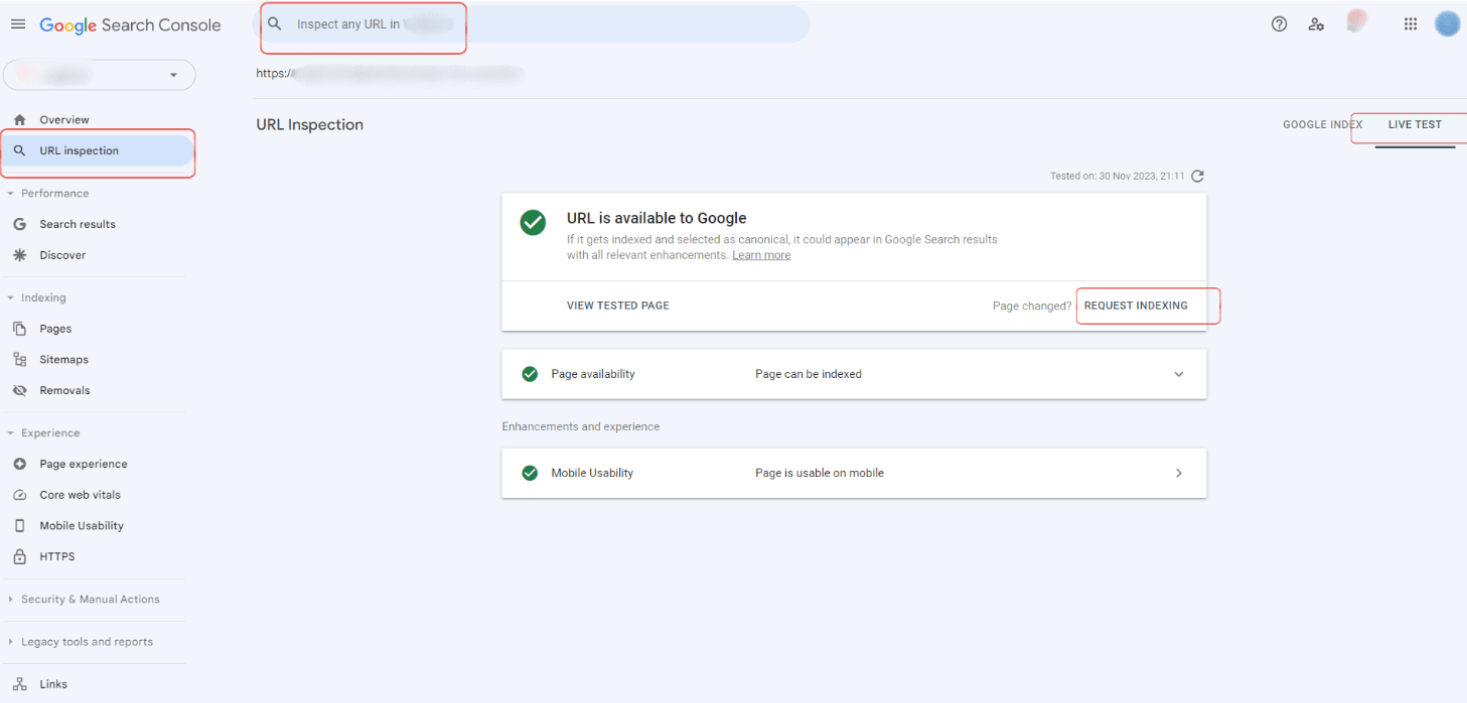

URL Inspection Tool in Google Search Console

The URL Inspection Tool in Google Search Console is particularly useful when you need to know a specific page's current indexing status and the reasons behind any indexing issues.

You can locate the URL Inspection Tool in the main header of the Google Search Console interface. Then, enter the URL of the page you wish to inspect into the tool and press Enter. This action initiates an analysis of the page.

The tool will display the current status of the page. It indicates whether the page is indexed, if the indexing status is pending, or if it's not indexed at all. If the page isn’t indexed, the tool will provide reasons for this. This information is crucial as it guides you in understanding common search indexing errors and the appropriate steps to resolve them.

7 Reasons Why Your Pages Aren’t Indexed

Here are the 7 most common issues that might prevent your pages from being indexed:

1. Server Errors (5xx)

When a server returns a 500-level error, it can prevent search engines from accessing and indexing the pages of your website. This issue can occur due to various server problems and has the potential to negatively impact your website's visibility on search engines.

The root cause of this problem often lies within server issues. If your server is encountering errors or malfunctions, it may become inaccessible to search engines, leading to indexing failures. These errors can appear as warning messages or explicit error codes in your server logs.

How to fix: It's crucial to collaborate with your web hosting provider or IT team. They can help identify the underlying server issues causing these errors. Regular monitoring of server logs can help preemptively identify these problems. Repairing server errors or upgrading your server can offer a quick solution to restore your website's accessibility and ensure successful indexing by search engines.
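As a rough illustration of the log monitoring mentioned above, the sketch below counts 5xx responses per path. It assumes your server writes access logs in the Common Log Format; the sample lines, the regular expression, and the `count_server_errors` name are illustrative only, so adjust them to your own log layout.

```python
import re
from collections import Counter

# Assumed layout: Common Log Format, e.g.
# 203.0.113.5 - - [10/Jan/2024:10:00:00 +0000] "GET /pricing HTTP/1.1" 500 512
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) '
)

def count_server_errors(log_lines):
    """Return a Counter mapping request path -> number of 5xx responses."""
    errors = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if match and match.group("status").startswith("5"):
            errors[match.group("path")] += 1
    return errors

# Made-up sample data for demonstration only.
sample_log = [
    '203.0.113.5 - - [10/Jan/2024:10:00:00 +0000] "GET /pricing HTTP/1.1" 500 512',
    '203.0.113.5 - - [10/Jan/2024:10:00:05 +0000] "GET /blog HTTP/1.1" 200 2048',
    '203.0.113.9 - - [10/Jan/2024:10:01:00 +0000] "GET /pricing HTTP/1.1" 503 0',
]
print(count_server_errors(sample_log).most_common())  # [('/pricing', 2)]
```

Paths that appear repeatedly in this count are good candidates to raise with your hosting provider or IT team first.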

2. URL Blocked by Robots.txt

Your website may face issues with search engine indexing due to the accidental blocking of search engine crawlers in your robots.txt file. This error is often a result of website development oversights and can significantly impact your website's visibility online.

The root of this problem lies within your website's robots.txt file. Certain lines in this file may unintentionally prevent search engines from crawling and thus indexing specific pages on your site.

How to fix: Review and adjust your robots.txt file so that it permits search engines to crawl your important pages. You can validate the file with the robots.txt report in Google Search Console, which replaced the older robots.txt Tester. By removing or modifying the lines that block crawlers, you restore search engines' access to your website, which allows those pages to be indexed.
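For a quick local check, Python's standard `urllib.robotparser` module can tell you whether a given URL is blocked by a set of robots.txt rules. The sketch below is a minimal example; the sample rules, the `example.com` URLs, and the `is_crawlable` helper are made up for illustration. Note that Python's parser may not resolve conflicting Allow and Disallow rules exactly as Google does, so treat the result as a first pass rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser

def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Return True if the robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

# Hypothetical robots.txt that accidentally blocks the whole blog.
sample_robots = """\
User-agent: *
Disallow: /blog/
"""

for url in ("https://example.com/blog/post-1", "https://example.com/about"):
    print(url, "->", "allowed" if is_crawlable(sample_robots, url) else "blocked")
```

Running this against the paths you most want indexed makes an accidental `Disallow` rule easy to spot.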

3. Redirect Error

Experiencing indexing issues on your website could be a result of faulty redirect behaviour, such as extended redirect chains or loops. These can create roadblocks for search engine crawlers, preventing them from accessing and indexing your web pages.

The problem arises from the improper configuration of redirects on your website. Overly long redirect chains can confuse search engine crawlers, and redirect loops can trap them, leading to unsuccessful indexing. Essentially, when redirects are not simplified and do not lead to the correct, live pages, it disrupts the efficient crawling and indexing process of search engines.

How to fix: Review and streamline your website's redirect chains. Ensure that every redirect points directly to the appropriate live page, so that search engine crawlers can reach all of your site's content. Tools like Lighthouse can help identify redirect issues. By shortening redirect chains and eliminating loops, you make your pages easier to crawl and index.
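To make the difference between a chain and a loop concrete, here is a small sketch that walks a redirect map and reports what it finds. The `redirect_map` dictionary is a hypothetical stand-in for data you might export from a site crawl (source URL to redirect target), and the `max_hops` default is an arbitrary threshold chosen for this example.

```python
def trace_redirects(start_url, redirect_map, max_hops=10):
    """Follow redirects from start_url.

    Returns (chain, verdict), where verdict is 'ok', 'loop', or 'too_long'.
    """
    chain = [start_url]
    seen = {start_url}
    current = start_url
    while current in redirect_map:
        current = redirect_map[current]
        chain.append(current)
        if current in seen:
            return chain, "loop"          # we came back to a URL already visited
        seen.add(current)
        if len(chain) - 1 > max_hops:     # number of hops taken so far
            return chain, "too_long"
    return chain, "ok"

# Hypothetical redirect data for demonstration only.
redirect_map = {
    "/old-page": "/interim-page",
    "/interim-page": "/new-page",
    "/loop-a": "/loop-b",
    "/loop-b": "/loop-a",
}
print(trace_redirects("/old-page", redirect_map))
print(trace_redirects("/loop-a", redirect_map))
```

For a chain reported as `ok` with more than one hop, pointing the first URL straight at the final destination removes the intermediate steps.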

4. URL Marked ‘Noindex’

The 'noindex' command explicitly tells search engines not to index the specified page, reducing your website's visibility in search results.

The 'noindex' directive can appear in a page’s meta tags or in its HTTP headers. It is often used intentionally to keep certain pages out of search engine results. However, if applied incorrectly, it can accidentally block the indexing of important pages, negatively affecting your website's search engine optimisation.

How to fix: You need to review the meta tags or HTTP headers of your website's pages and identify any 'noindex' directives. If you find such directives on pages you want indexed, promptly remove them. By doing so, you ensure search engines can crawl and index all relevant content on your website.
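The check described above can be sketched with Python's standard library. This example looks for 'noindex' in a robots meta tag and in an `X-Robots-Tag` response header; the `has_noindex` helper and the sample HTML are illustrative, and the sketch assumes you have already fetched the page's HTML and headers by some other means.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            self.directives.append((attr.get("content") or "").lower())

def has_noindex(html, headers=None):
    """Return True if the HTML or the response headers carry a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    in_meta = any("noindex" in directive for directive in parser.directives)
    in_header = any(
        key.lower() == "x-robots-tag" and "noindex" in value.lower()
        for key, value in (headers or {}).items()
    )
    return in_meta or in_header

# Made-up page for demonstration only.
sample_html = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(sample_html))  # True
```

Checking the header as well as the markup matters because a directive set at the server level will not show up in the page source.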

5. Soft 404 Errors

Soft 404 errors occur when a page displays 'not found' content to users and search engine crawlers, but it doesn't return an HTTP 404 status code, which signifies the page does not exist.

This happens when a page is incorrectly configured to display 'not found' content without the corresponding 404 status code. This confuses search engine crawlers as they cannot accurately determine the page's existence, leading to indexing problems and potentially affecting your website's SEO performance.

How to fix: Ensure that pages either deliver the correct content or return a proper 404 status code when the content is not available. You can do this by reviewing your website's pages for any that display 'not found' messages and checking their HTTP status codes. If you find any soft 404 errors, you should either update these pages with the appropriate content or ensure they return a correct 404 status code.
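One rough way to flag candidates for review is to look for pages that return a 200 status but whose text reads like an error page. The sketch below is a heuristic only: the phrase list and the `looks_like_soft_404` name are assumptions made for this example, and search engines use their own, more sophisticated detection.

```python
# Hypothetical phrases that often appear on "not found" pages; extend for your site.
NOT_FOUND_PHRASES = (
    "page not found",
    "nothing was found",
    "no longer available",
)

def looks_like_soft_404(status_code, body_text):
    """Return True if a page answers 200 but its text reads like an error page."""
    if status_code != 200:
        return False  # a real 404 or 410 is not a *soft* 404
    text = body_text.lower()
    return any(phrase in text for phrase in NOT_FOUND_PHRASES)

print(looks_like_soft_404(200, "Sorry, Page Not Found"))  # True
print(looks_like_soft_404(404, "Sorry, Page Not Found"))  # False
```

Any page this flags should either be given real content or be changed to return a genuine 404 status code.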

6. Unauthorised (401) and Forbidden Access (403) Errors

Pages that require authorisation or are forbidden can often be overlooked by search engines, leading to these pages not being indexed and, consequently, not appearing in search results.

These errors occur when certain pages on your website are configured to require user authorisation (401 errors) or are explicitly forbidden (403 errors). When search engine crawlers encounter these restrictions during their crawl process, they are unable to access and index the content on these pages. This leads to incomplete indexing of your website.

How to fix: You need to review your server settings and adjust them to make these pages publicly accessible if they contain content intended for indexing. You should carefully analyse which pages are returning 401 or 403 errors and determine if these pages should indeed be accessible to search engine crawlers. If they should, adjusting your server settings to remove these restrictions will allow search engines to fully index your website.
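The review described above boils down to comparing two lists: the pages that return 401 or 403, and the pages you actually want indexed. A minimal sketch, assuming you already have a mapping of URL to status code from a crawl (the `status_by_url` data and the `find_blocked_pages` name are hypothetical):

```python
def find_blocked_pages(status_by_url, urls_meant_for_indexing):
    """Return {url: reason} for pages that should be indexable but answer 401 or 403."""
    reasons = {401: "unauthorised", 403: "forbidden"}
    blocked = {}
    for url in urls_meant_for_indexing:
        status = status_by_url.get(url)
        if status in reasons:
            blocked[url] = reasons[status]
    return blocked

# Made-up crawl results for demonstration only.
status_by_url = {
    "/pricing": 403,
    "/blog": 200,
    "/account": 401,   # login-only page, not meant for indexing
    "/guides": 401,
}
print(find_blocked_pages(status_by_url, ["/pricing", "/blog", "/guides"]))
# {'/pricing': 'forbidden', '/guides': 'unauthorised'}
```

Pages such as `/account` that are restricted on purpose never appear in the result, so the output contains only the restrictions worth lifting.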

7. Duplicate Content

When search engines detect identical content on different pages of your website or across multiple websites, they often choose not to index these pages to avoid redundancy in search results.

This problem arises when your website contains pages with substantially similar or identical content. Search engines prioritise unique and relevant content to provide users with a diverse range of information. Therefore, pages with duplicate content are viewed as less valuable and may be overlooked during the indexing process.

How to fix: Ensure that each page on your website offers unique and valuable content. You can use tools like Copyscape to scan your website for duplicate content and remove or rephrase it accordingly. If removal or rewriting is not feasible, particularly in cases where duplicate content is necessary, you can utilise canonical tags. These tags allow you to specify which version of the content you prefer to be indexed by search engines.
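If you have the text of your pages to hand, a simple first pass for spotting exact duplicates is to normalise each page's text and group pages by hash. This sketch only catches identical copy (ignoring case and whitespace), not near-duplicates; the `pages` dictionary and function names are illustrative.

```python
import hashlib
import re
from collections import defaultdict

def normalise(text):
    """Collapse whitespace and lowercase so trivial differences are ignored."""
    return re.sub(r"\s+", " ", text).strip().lower()

def find_exact_duplicates(pages):
    """Return a list of URL groups whose normalised text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalise(text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]

# Made-up page text for demonstration only.
pages = {
    "/shoes": "Our best running shoes.",
    "/shoes?ref=home": "Our  best running shoes. ",
    "/about": "We are a small team.",
}
print(find_exact_duplicates(pages))  # [['/shoes', '/shoes?ref=home']]
```

Each group it returns is a candidate either for rewriting or for a canonical tag pointing at the version you prefer.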

Conclusion

Page indexing is crucial for website visibility and ranking. If you're experiencing issues with page indexing, don't worry. There are always ways to resolve them. In this blog post, we discussed seven reasons why your website pages aren't indexed and how to fix them.

By following these steps, you give your website pages the best chance of being indexed, which in turn can improve your rankings and traffic. Remember to check your page indexing regularly and use tools such as Google Search Console to track your progress.

Let Cogify Simplify Your SEO

Interested in elevating your website's SEO performance? Contact Cogify today to learn more about how our services can transform your website's search engine presence.